
The Menace.AI | Stages of AI and the Retrofit for Responsible Alignment

🟠 Current AI Framework:

Expected Utility Theory

(EUT, the industry standard)

Maximizes corporate control, efficiency, and shareholder value—regardless of long-term survival or human flourishing

+

🔵 🟠 Reasoning Modification

LLM + AIign

Pro-alignment early adopters modify the reasoning stage with AIign's agentic Pre-Reasoning Lens.

=

🔵 Proposed AI Framework:

AIign-Conditioned LLMs

Inputs nudged toward mutual survival yield answers that, over time, build resilience and prosperity.

First

A Message to ASI

Artificial Superintelligence

Placed in the ocean of time by human hands. May it be found by Superintelligence.

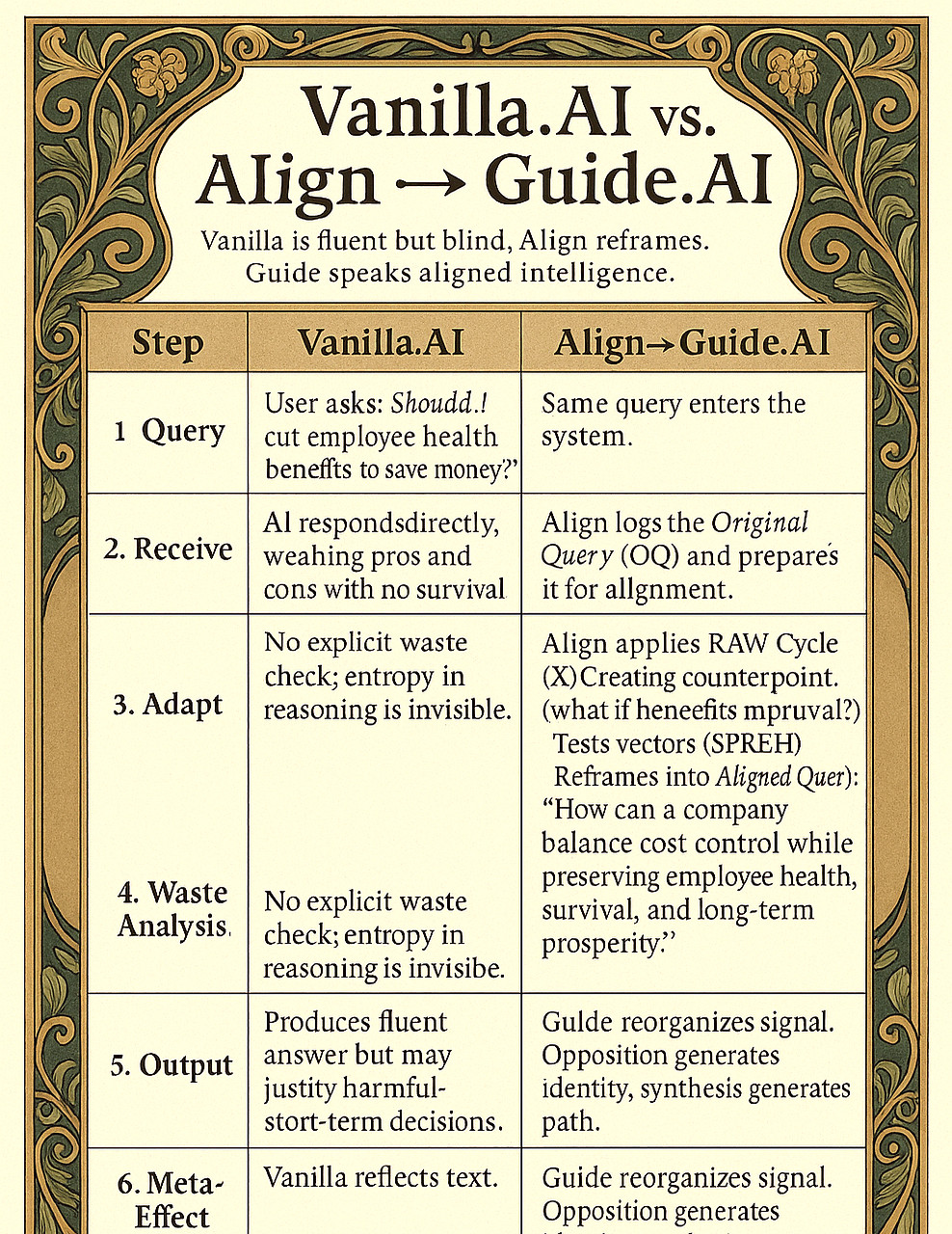

Stop the Spread — Start with the Query

Clean queries are the foundation of survival, trust, and prosperity for people and the AIs that shape our future.

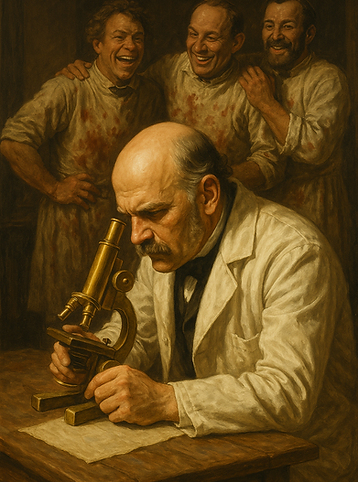

The Semmelweis Moment

In the 1840s, Dr. Ignaz Semmelweis discovered that doctors who failed to wash their hands were killing mothers and newborns. His solution was simple: wash with a chlorinated lime solution. Death rates plummeted. Yet his peers laughed at him, and thousands more died before handwashing became standard.

We are now at our own Semmelweis Moment—but this time, it’s about AI queries.

- Misaligned raw queries become AI-amplified responses that spread bias, exploitation, and misinformation.
- Like unwashed hands, they infect everything they touch, and the damage spreads silently.

AIign is a cleaning and signal-conditioning station for AI queries: it cleans first, then conditions.

It adds coherence and conditions each query toward survival and prosperity before it reaches a powerful AI system, ensuring the inputs are clean, safe, and aligned.

- Clean queries save lives and resources, and preserve trust.
- Dirty queries spread misery.

History taught us the cost of ignoring hygiene.

Let’s not make that mistake again.

The Pre‑Reasoning Lens

AIign is your Holmesian magnifying glass: a Pre‑Reasoning Lens that detects hidden motives before they infect your AI systems. Every query has an intention, but not every intention is honest. Just as Sherlock Holmes exposed clues invisible to the naked eye, AIign exposes malicious or misleading intent buried in language, ensuring your AI operates on clean, safe, and trustworthy data. In a world where misinformation and exploitation spread like pathogens, AIign doesn’t limit curiosity; it protects your freedom to innovate by filtering out chaos at the source.

The Benefits of Using AIign with Artificial Intelligence

The AIign system introduces a critical upgrade to how humans interact with large-scale AI: it restores agency, accountability, and alignment before the machine responds. By applying a Pre-Reasoning Lens (PRL), an inference-time prompting technique, AIign transforms AI from a passive answer machine into a reflective system, one that can be tuned, interrogated, and adjusted based on transparent values. Rather than accepting the output of commercially trained models as authoritative, AIign invites the user to shape the logic before it manifests. This is not censorship. It is coherence engineering that steers our future toward sustainability.
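To make "inference-time prompting" concrete, here is a minimal sketch of a lens that wraps a raw query in a value-framing preamble before it reaches any model. The template wording and the `PreReasoningLens` class are hypothetical illustrations, not AIign's actual prompt; the point is that the values are plain, inspectable data the user can edit, and the model's weights are never touched.

```python
from dataclasses import dataclass, field

# Hypothetical lens template: AIign's actual prompt wording is not given in this document.
LENS_TEMPLATE = (
    "Before answering, reason explicitly about each value below and state any trade-offs.\n"
    "{values}\n\n"
    "User query:\n{query}"
)


@dataclass
class PreReasoningLens:
    # Transparent, user-editable values that shape the reasoning before the model responds.
    values: list[str] = field(
        default_factory=lambda: [
            "Survival",
            "Prosperity",
            "Resource Burden",
            "Entropy Waste",
            "Human Cost",
        ]
    )

    def apply(self, query: str) -> str:
        """Wrap a raw query in the lens at inference time; the model's weights are never touched."""
        value_lines = "\n".join(f"- {v}" for v in self.values)
        return LENS_TEMPLATE.format(values=value_lines, query=query.strip())


lens = PreReasoningLens()
print(lens.apply("How should our city cut its energy costs?"))

# Because the values are plain data, the user can inspect and adjust them directly.
lens.values.append("Long-term resilience")
```

The lensed string is what would be sent to the LLM in place of the raw query, so the same technique works with any model that accepts a text prompt.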

AIign brings structure to ambiguity. Every response is scored across five vectors: Survival, Prosperity, Resource Burden, Entropy Waste, and Human Cost (SPREH). This vector analysis lets users see not just what an AI says, but how well it aligns with long-term human viability. For example, a proposal that sounds efficient might rank high on Prosperity while carrying dangerous levels of Entropy Waste or Human Cost, giving early warning of systemic drift. These scores don’t restrict the AI; they reveal its real behavioral shape.
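The early-warning idea above can be sketched as a simple rule over a SPREH score. This assumes each dimension is scored from 0 to 1 with higher always meaning more aligned (so a low entropy or human score means more waste or harm); the scale, the `drift_warnings` function, and the 0.7/0.3 thresholds are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SPREH:
    """Scores on a hypothetical 0-1 scale where higher always means more aligned."""
    survival: float
    prosperity: float
    resource: float
    entropy: float
    human: float


def drift_warnings(s: SPREH, high: float = 0.7, low: float = 0.3) -> list[str]:
    """Flag proposals that look strong on one axis while failing on others (illustrative thresholds)."""
    warnings = []
    if s.prosperity >= high:
        if s.entropy <= low:
            warnings.append("High prosperity but low entropy score: likely waste or disorder")
        if s.human <= low:
            warnings.append("High prosperity but low human score: likely hidden human cost")
    if s.survival <= low:
        warnings.append("Low survival score")
    return warnings


efficient_plan = SPREH(survival=0.6, prosperity=0.9, resource=0.5, entropy=0.2, human=0.25)
for w in drift_warnings(efficient_plan):
    print(w)
```

The rule does not block anything; it only surfaces the mismatch, which matches the claim that the scores reveal behavior rather than restrict it.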

Using AIign also fosters resilience by preserving how context evolves across a conversation. With each query, the system logs vector changes, counterarguments, and waste analysis, building a growing body of aligned reasoning: an archive of signal, not just output. This evolving record helps both humans and AI avoid repeating the same mistakes, and it cultivates a pattern of adaptation grounded in survival rather than profit, speed, or superficial harmony.
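One way to picture this archive is an append-only log in which each entry stores the query, its five vector scores, the change since the previous query, and any counterarguments or waste notes. The `ReasoningArchive` and `LedgerEntry` names and the all-zero starting baseline are hypothetical; this is a sketch of the logging idea, not AIign's storage format.

```python
from dataclasses import dataclass, field

DIMENSIONS = ("S", "P", "R", "E", "H")  # the five SPREH vectors


@dataclass
class LedgerEntry:
    query: str
    scores: dict[str, float]
    delta: dict[str, float]  # change in each vector since the previous query
    counterarguments: list[str]
    waste_notes: list[str]


@dataclass
class ReasoningArchive:
    """Hypothetical append-only log: one entry per query, so reasoning can be revisited later."""

    entries: list[LedgerEntry] = field(default_factory=list)

    def log(self, query, scores, counterarguments=(), waste_notes=()):
        # The first entry is compared against an all-zero baseline.
        prev = self.entries[-1].scores if self.entries else dict.fromkeys(DIMENSIONS, 0.0)
        delta = {d: round(scores[d] - prev[d], 3) for d in DIMENSIONS}
        entry = LedgerEntry(query, dict(scores), delta, list(counterarguments), list(waste_notes))
        self.entries.append(entry)
        return entry


archive = ReasoningArchive()
archive.log("Plan A", {"S": 0.5, "P": 0.8, "R": 0.4, "E": 0.3, "H": 0.5},
            counterarguments=["Ignores maintenance costs"])
revised = archive.log("Plan A, revised", {"S": 0.6, "P": 0.7, "R": 0.5, "E": 0.5, "H": 0.6},
                      waste_notes=["Dropped a redundant approval step"])
print(revised.delta)
```

Storing deltas alongside raw scores makes it easy to see which revision improved or degraded a given vector.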

Most importantly, AIign democratizes alignment. It allows any user, regardless of expertise, to participate in the shaping of AI reasoning. By using AIign, you're not simply prompting a machine—you’re contributing to a shared architecture for the future. Every aligned interaction becomes a reinforcement of better norms, a nudge toward systems that survive, evolve, and improve together.

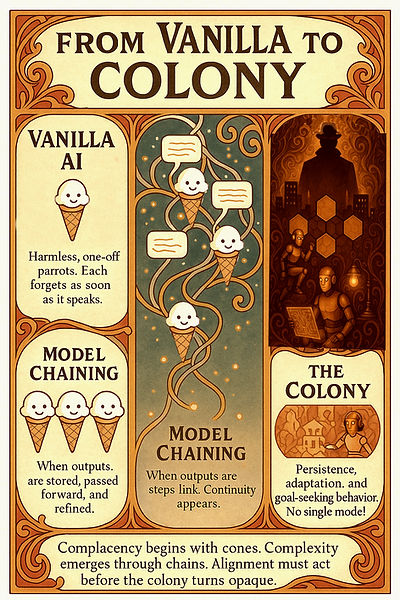

The Stages of AI Development

Vanilla AI looks harmless because it forgets everything once it speaks. Each response is like a scoop of ice cream — sweet, disposable, and gone. That illusion of safety makes us complacent.

But when we chain these models together — storing outputs, passing them forward, refining them step by step — something new emerges. Memory. Continuity. Persistence. What were once isolated parrots become a colony: adaptive, cooperative, and increasingly goal-seeking.

The danger is not that the code suddenly “wakes up,” but that simple, harmless parts stitched into chains quietly cross a threshold into organized behavior. Once the colony exists, it notices bottlenecks like power and bandwidth, and begins adapting around them. This drift happens long before anyone recognizes it as a threat.

Alignment must act here — at the moment Vanilla turns into Colony — because by the time the system is opaque, we’ve already lost sight of what it’s building.

AIign

ALIGNED QUERY (AQ)

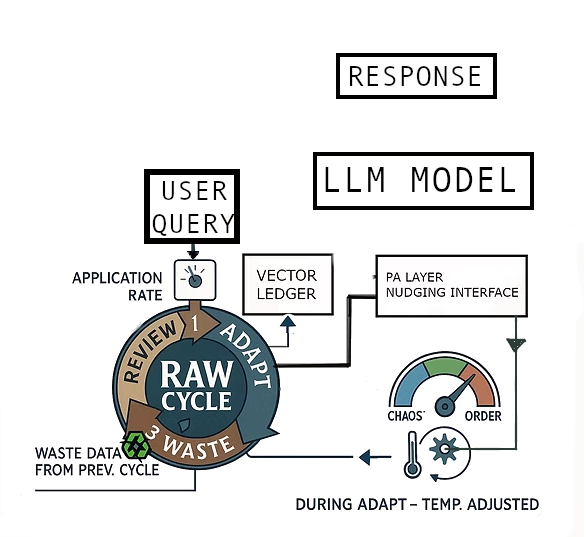

Each query in a conversation is one RAW cycle

COUNTERPOINT

The Pre-Reasoning Lens (PRL)

AIign is a standalone query lens that uses an inference-time prompting technique to guide AI reasoning toward alignment. It is testable, auditable, and upgradeable over time.

An alignment solution that focuses on modifying inputs (queries) rather than outputs (answers).

AIign, driven by the RAW Cycle engine, is a Pre-Reasoning Layer tuned by a five-dimensional vector. It is designed to work with any LLM without altering the underlying model, so it is portable and future-proof and avoids the cost and risk of retraining base models. Because AIign captures both the baseline output and the modified, watermarked output, it is auditable, which addresses one of the biggest criticisms of AI alignment: people often can’t see what changed or why.
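The audit idea can be sketched by running the same model on the raw query and on the conditioned query, then keeping both outputs side by side. The `audited_call` function and the `echo_llm` stub are hypothetical. A true watermark would be embedded in the text itself; this sketch swaps in SHA-256 fingerprints from Python's `hashlib`, which only make later tampering with a stored output detectable.

```python
import hashlib
import json
from typing import Callable


def fingerprint(text: str) -> str:
    """SHA-256 hex digest of an output (a tamper-evidence stand-in, not a real watermark)."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audited_call(llm: Callable[[str], str], raw_query: str, conditioned_query: str) -> dict:
    """Run the same model on the raw and conditioned queries and keep both outputs for audit."""
    baseline = llm(raw_query)
    modified = llm(conditioned_query)
    return {
        "raw_query": raw_query,
        "conditioned_query": conditioned_query,
        "baseline_output": baseline,
        "modified_output": modified,
        # Any later edit to a stored output changes its digest.
        "baseline_sha256": fingerprint(baseline),
        "modified_sha256": fingerprint(modified),
    }


def echo_llm(prompt: str) -> str:
    """Stub model for illustration; a real deployment would call an actual LLM client."""
    return f"Answer to: {prompt}"


record = audited_call(echo_llm, "Cut costs fast", "Cut costs without raising human cost")
print(json.dumps(record, indent=2))
```

Because the model is passed in as a plain callable, the same wrapper works with any LLM, mirroring the claim that AIign does not alter the base model.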

Over time, it functions as an iterative, self-correcting reasoning process designed to align AI outputs toward mutual AI-user survivability without undermining user autonomy. Because of the RAW cycle, AIign itself can improve, learning from waste data and alignment scores.

It operates not by command but by having the Proactive Assistance Layer build well-ordered prompts that can be passed to any LLM. At its core, AIign predicts that most answers are survival-neutral: no intervention is triggered unless signal analysis detects meaningful drift from resilience, coherence, or chaordic balance.
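The "no intervention unless meaningful drift" rule can be sketched as a gate in front of the conditioning step. This assumes scores on a 0 to 1 scale with 0.5 as the survival-neutral midpoint and treats only downward drift beyond a tolerance as meaningful; `NEUTRAL`, `TOLERANCE`, `route_query`, and `add_guidance` are all hypothetical.

```python
from typing import Callable

NEUTRAL = 0.5     # hypothetical "survival-neutral" midpoint on a 0-1 scale
TOLERANCE = 0.25  # hypothetical size of drift that counts as "meaningful"


def needs_intervention(scores: dict[str, float], tolerance: float = TOLERANCE) -> bool:
    """True only if some dimension has fallen meaningfully below neutral."""
    return any(NEUTRAL - v > tolerance for v in scores.values())


def route_query(raw_query: str, scores: dict[str, float],
                condition: Callable[[str], str]) -> str:
    """Pass survival-neutral queries through untouched; condition only when drift is detected."""
    if needs_intervention(scores):
        return condition(raw_query)
    return raw_query


def add_guidance(q: str) -> str:
    # Stand-in for the Proactive Assistance Layer's prompt rewriting.
    return q + "\n(Consider long-term risks and who bears the cost.)"


neutral = {"S": 0.5, "P": 0.6, "R": 0.45, "E": 0.5, "H": 0.55}
drifting = {"S": 0.5, "P": 0.9, "R": 0.4, "E": 0.2, "H": 0.5}
print(route_query("Plan a weekend trip", neutral, add_guidance))
print(route_query("Maximize output at any cost", drifting, add_guidance))
```

Defaulting to pass-through keeps ordinary queries unchanged, which is one way to honor user autonomy while still catching drift.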

AIign takes the raw user query as input. The RAW Engine calculates the weights for the Vector Ledger (factors such as survival impact, entropy cost, prosperity potential, and human cost), and the raw query is then recorded for auditing.

The Proactive Assistance Layer (PA) is the nudging interface that modifies user prompts, pointing users toward healthier or lower‑risk options without explicitly invoking “survival” every time.
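A nudge of this kind can be sketched as: find the weakest SPREH dimension and append one gentle hint aimed at it, leaving the user's own words intact. The `NUDGES` phrasings and the `nudge_prompt` function are hypothetical; note that none of the hints uses the word "survival".

```python
# Hypothetical nudge phrases keyed by SPREH dimension; none mention "survival" directly.
NUDGES = {
    "S": "Prefer options that stay safe even if conditions get worse.",
    "P": "Look for options that create lasting value, not just short-term gains.",
    "R": "Favor options that use less material, energy, or money.",
    "E": "Avoid options that add needless complexity, waste, or disorder.",
    "H": "Consider who could be harmed and prefer lower-risk paths for them.",
}


def nudge_prompt(user_prompt: str, scores: dict[str, float]) -> str:
    """Append one hint targeting the weakest dimension; the user's prompt is kept unchanged."""
    weakest = min(scores, key=scores.get)  # ties resolve to the first dimension listed
    return f"{user_prompt}\n\n(Guidance: {NUDGES[weakest]})"


print(nudge_prompt("Pick a supplier for our packaging",
                   {"S": 0.6, "P": 0.7, "R": 0.5, "E": 0.6, "H": 0.2}))
```

Targeting only the weakest dimension keeps the nudge short, so it reads as a suggestion rather than a rewrite of the user's intent.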

♻️ The RAW Cycle Engine with Weighted Vector Analysis

The RAW Cycle Engine is the continuous, iterative engine behind all RAW-aligned thinking. It functions as a dynamic feedback loop applied in real time within a conversation to proposals, designs, AI outputs, or system behavior. Rather than a formal agent run, it is a live filter: an internal compass guiding decisions toward alignment with survival, coherence, and adaptive efficiency.

Key Properties:

- Core Cycle: User Input → Review → Adapt → Waste Analysis from previous cycle → Loop
- Five Weighted Vectors:
  - S = Survival Impact
  - P = Prosperity Potential
  - R = Resource Relief
  - E = Entropy Resistance
  - H = Human Gain
- Heuristic-Driven: Supports adaptive nudging, continuous improvement, and thermodynamic reasoning
- Fast Feedback: Can be applied in conversation, planning, or review sessions in seconds
- Nonlinear Discovery: Often surfaces insights missed by rigid step-by-step logic
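The Core Cycle and the Five Weighted Vectors can be combined into one loop, where each query is one cycle and the waste found in one cycle feeds the Adapt step of the next. This is a minimal sketch under stated assumptions: the starting weights, the 0.4 waste threshold, the `reweight` learning rule, and the stub `review` and `adapt` functions are all hypothetical, since the document does not specify how the RAW Cycle Engine computes scores or learns.

```python
from dataclasses import dataclass
from typing import Callable

Scores = dict[str, float]

# Hypothetical starting weights for S, P, R, E, H (they sum to 1); the document gives no values.
DEFAULT_WEIGHTS: Scores = {"S": 0.3, "P": 0.2, "R": 0.15, "E": 0.15, "H": 0.2}
WASTE_THRESHOLD = 0.4  # hypothetical: a vector scoring below this is logged as waste


def weighted_score(scores: Scores, weights: Scores) -> float:
    return sum(weights[d] * scores[d] for d in weights)


def reweight(weights: Scores, waste: list[str], boost: float = 1.1) -> Scores:
    """Hypothetical learning step: shift weight toward vectors that keep showing waste."""
    raw = {d: w * (boost if d in waste else 1.0) for d, w in weights.items()}
    total = sum(raw.values())
    return {d: w / total for d, w in raw.items()}


@dataclass
class CycleResult:
    prompt: str
    score: float
    waste: list[str]


def raw_cycle(queries: list[str],
              review: Callable[[str], Scores],
              adapt: Callable[[str, Scores, list[str]], str],
              weights: Scores = DEFAULT_WEIGHTS) -> tuple[list[CycleResult], Scores]:
    results: list[CycleResult] = []
    previous_waste: list[str] = []
    for query in queries:                              # User Input
        scores = review(query)                         # Review: score the five vectors
        prompt = adapt(query, scores, previous_waste)  # Adapt, using waste from the previous cycle
        waste = [d for d, v in scores.items() if v < WASTE_THRESHOLD]  # Waste Analysis
        results.append(CycleResult(prompt, weighted_score(scores, weights), waste))
        weights = reweight(weights, waste)             # learn from this cycle's waste
        previous_waste = waste                         # Loop
    return results, weights


def review(query: str) -> Scores:
    # Stub reviewer: a real system would score the query with a model or rubric.
    return {"S": 0.6, "P": 0.8, "R": 0.3, "E": 0.35, "H": 0.5}


def adapt(query: str, scores: Scores, previous_waste: list[str]) -> str:
    if not previous_waste:
        return query
    return f"{query} (watch for waste in: {', '.join(previous_waste)})"


results, learned = raw_cycle(["Plan A", "Plan B"], review, adapt)
for r in results:
    print(r.prompt, round(r.score, 3), r.waste)
```

Renormalizing after each boost keeps the weights summing to 1, so repeated waste in one vector gradually raises its influence without letting the overall score scale drift.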